Interactivity x Explainability:

Toward Understanding How Interactivity Can Improve Computer Vision Explanations

* = Authors contributed equally

Overview

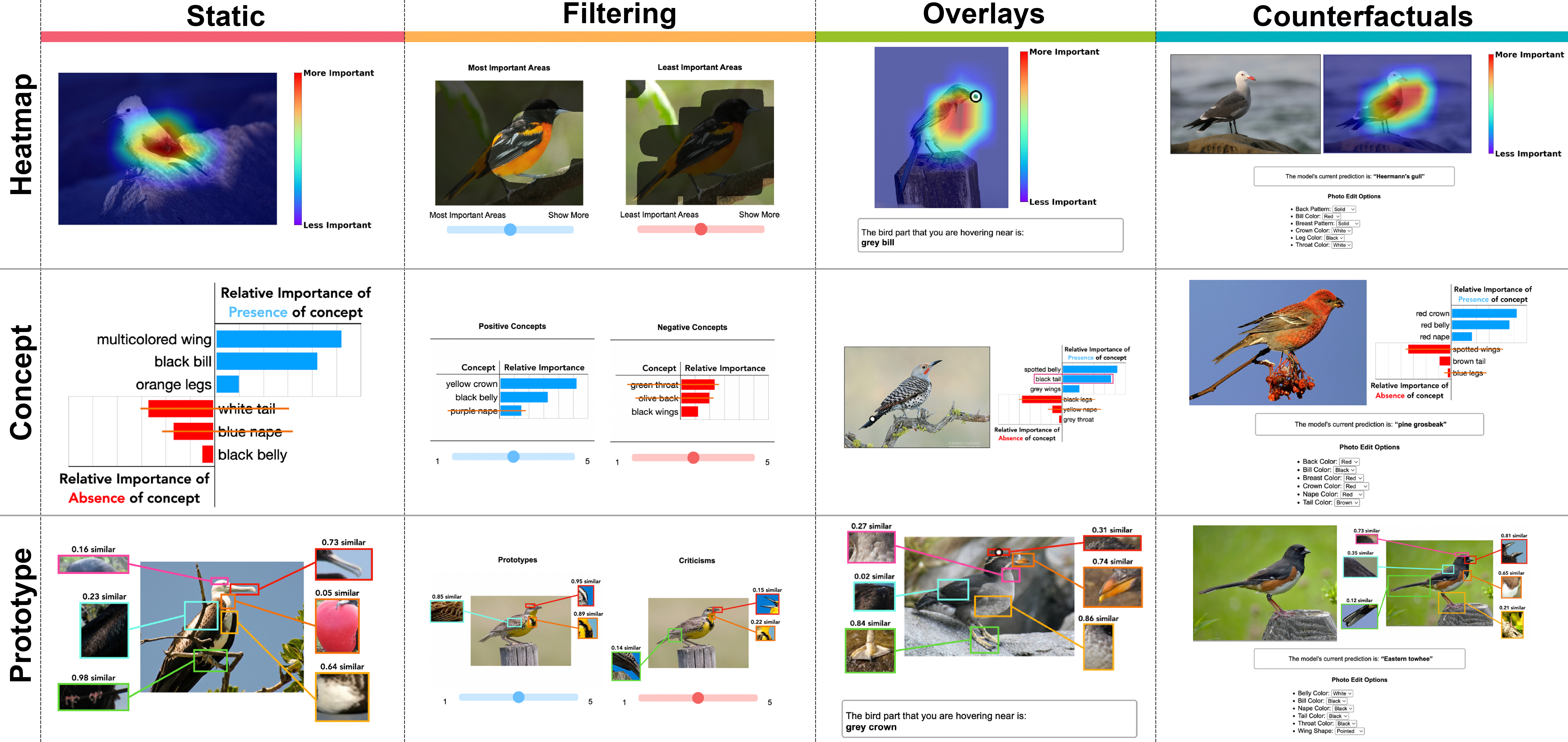

Explanations for computer vision models are important tools for interpreting how the underlying models work. However, they are often presented in static formats, which pose challenges for users, including information overload, a gap between semantic and pixel-level information, and limited opportunities for exploration. We investigate interactivity as a mechanism for tackling these issues in three common explanation types: heatmap-based, concept-based, and prototype-based explanations.

We conducted a study (N=24) with participants of diverse technical and domain expertise, using a bird identification task. We found that while interactivity enhances user control, facilitates rapid convergence to relevant information, and allows users to expand their understanding of the model and explanation, it also introduces new challenges. To address these challenges, we provide design recommendations for interactive computer vision explanations, including carefully selected default views, independent input controls, and constrained output spaces.

Citation

ACM page available here: Link

@inproceedings{panigrahi2025interactivity,
  author = {Indu Panigrahi and Sunnie S. Y. Kim* and Amna Liaqat* and Rohan Jinturkar and Olga Russakovsky and Ruth Fong and Parastoo Abtahi},
  title = {{{Interactivity x Explainability}: Toward Understanding How Interactivity Can Improve Computer Vision Explanations}},
  booktitle = {Extended Abstracts of the CHI Conference on Human Factors in Computing Systems (CHI EA)},
  year = {2025}
}

Acknowledgements

Firstly, we thank our participants for taking the time to participate in our study. We also thank Patrick Newcombe and Fengyi Guo for their help in identifying bird species and images of similar difficulty for the study. Lastly, we thank members of Princeton HCI and the Princeton Visual AI Lab, especially Vikram Ramaswamy, for providing feedback and resources throughout this project.

This material is based upon work supported by the National Science Foundation under grant No. 2145198 (OR) and the Graduate Research Fellowship (SK), as well as the Princeton SEAS Howard B. Wentz, Jr. Junior Faculty Award (OR), the Princeton SEAS Innovation Fund (RF), and Open Philanthropy (RF, OR). Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the sponsors.

Contact

Project page template adapted from Sunnie S. Y. Kim.